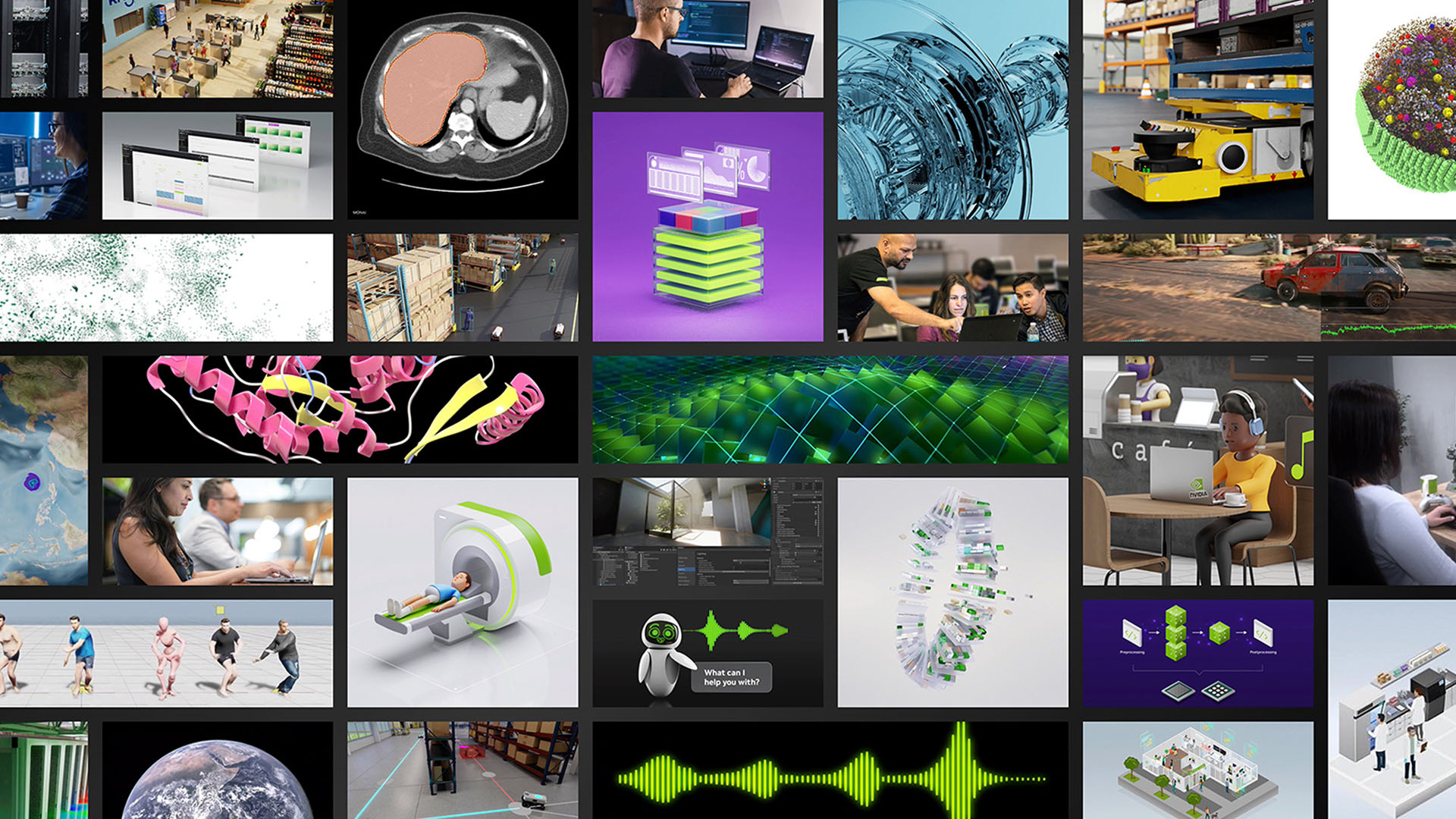

Developer Resources for the Public Sector

A hub of news, SDKs, technical resources, and more for developers working in the public sector.

Frameworks and SDKs

NVIDIA NeMo

NVIDIA NeMo is an end-to-end platform for developing custom generative AI, including large language models (LLMs), multimodal, vision, and speech AI, anywhere.

Learn More About NeMo

NVIDIA Morpheus

NVIDIA Morpheus is a GPU-accelerated, end-to-end AI framework that enables developers to create optimized applications for filtering, processing, and classifying large volumes of streaming cybersecurity data.

Learn More About Morpheus

NVIDIA Omniverse

NVIDIA Omniverse is a modular development platform of APIs and microservices for building 3D applications and services powered by Universal Scene Description (OpenUSD) and NVIDIA RTX.

Learn More About Omniverse

NVIDIA Holoscan

NVIDIA Holoscan is a domain-agnostic AI computing platform that delivers the accelerated, full-stack infrastructure required for scalable, software-defined, and real-time processing of streaming data running at the edge or in the cloud.

Learn More About Holoscan

NVIDIA Isaac Sim

NVIDIA Isaac Sim is a reference application enabling developers to design, simulate, test, and train AI-based robots and autonomous machines in a physically based virtual environment.

Learn More About Isaac Sim

NVIDIA Metropolis

NVIDIA Metropolis is an advanced collection of developer workflows and tools to build, deploy, and scale vision AI and generative AI from the edge to the cloud.

Learn More About Metropolis

NVIDIA RAPIDS

NVIDIA RAPIDS is an open-source suite of GPU-accelerated data science and AI libraries with APIs that match the most popular open-source data tools.

Learn More About RAPIDS

MatX

MatX is a modern C++ library for numerical computing on NVIDIA GPUs, with limited support for CPUs.

Learn More About MatX

Application-Specific Resources

Retrieval-Augmented Generation: A New Frontier in Governmental Efficiency

Learn the basics of RAG and how its different use cases can help federal agencies achieve a new standard of efficiency.

Watch Video (49:37)

Deploying LLMs in a Resource-Constrained Environment

Government-sponsored projects often come with restrictions. Learn how to effectively deploy LLMs in a resource-constrained environment for government applications.

Watch Video (26:45)

Beyond RAG Basics: Building Agents, Co-Pilots, Assistants, and More

Discover how building LLM-powered agents requires using various tools, extracting information from multiple data sources, working with different modalities, and more.

Watch Video (01:20:07)

Retrieval-Augmented Generation: Overview of Design Systems, Data, and Customization

Explore the potential of RAG as well as the design of end-to-end RAG systems, including data preparation and retriever and generator models.

Watch Video (56:55)
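
As a rough illustration of the retriever-plus-generator design described above, the following is a minimal sketch in plain Python, not the approach shown in the video. It assumes a toy bag-of-words cosine similarity in place of a real embedding model, and it stops at assembling the prompt that a generator model would receive. The corpus `DOCS` and the helper names `tokenize`, `cosine`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_prompt(query, passages):
    """Assemble the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical corpus standing in for prepared agency documents.
DOCS = [
    "Permit applications are processed within ten business days.",
    "Flood alerts are issued by the regional monitoring office.",
    "Passport renewals require one recent photograph.",
]

if __name__ == "__main__":
    question = "How long do permit applications take?"
    top = retrieve(question, DOCS, k=1)
    # The assembled prompt is what would be sent to a generator model.
    print(build_prompt(question, top))
```

In a production system the similarity function would typically be replaced by an embedding model and a vector index, and the prompt would be passed to an LLM; the data flow of prepare, retrieve, and generate stays the same.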

Power Your AI Projects With New NVIDIA NIM Microservices for Mistral and Mixtral Models

With NVIDIA NIM, shorten the time it takes to build AI applications for production deployments, enhance AI inference efficiency, and reduce operational costs.

Read Blog

Translate Your Enterprise Data Into Actionable Insights With NVIDIA NeMo Retriever

NeMo Retriever is a collection of microservices enabling semantic search of enterprise data to deliver highly accurate responses using retrieval augmentation. Learn how to talk to your data and make faster, smarter decisions.

Read Blog

Building Lifelike Digital Avatars With NVIDIA ACE Microservices

Discover how to bring digital avatars to life with generative AI and take four state-of-the-art AI models and implement them into an end-to-end digital avatar solution.

Read Blog

How to Apply Generative AI to Improve Cybersecurity

Dive into the latest innovations and technologies that will help you develop and deploy high-performance AI pipelines that can scale to provide complete visibility across your network and better, faster threat detection.

Watch Video (49:18)

Accelerating Security Vulnerability Management With Generative AI and RAG

Gain hands-on, instructor-led experience in using large language models, generative AI, RAG, and machine learning techniques to rapidly identify and mitigate security vulnerabilities.

Watch Video (03:31:28)

How to Build an Application for Generative AI-Based Spear Phishing Detection

Learn how to build an AI workflow that helps address data gaps by using LLMs and generative AI to enrich spear-phishing detection models.

Watch Video (01:08:23)

Digital Fingerprinting to Detect Cyber Threats DLI Course

Get hands-on experience developing and deploying the NVIDIA fingerprinting AI workflow that enables 100% data visibility and drastically reduces the time to detect threats.

Enroll Now

Applying Generative AI for CVE Analysis at an Enterprise Scale

Learn how AI agents and retrieval-augmented generation add intelligence to common vulnerabilities and exposures (CVE) analysis.

Read Blog

Best Practices for Securing LLM-Enabled Applications

Learn about the common risks associated with LLMs and best practices for ensuring your LLM-enabled applications are secure.

Read Blog

Enhancing Anomaly Detection in Linux Audit Logs With AI

Discover how to use the NVIDIA Morpheus cybersecurity AI framework to detect anomalies in Linux audit logs using generative AI and accelerated computing.

Read Blog

Robotics in the Age of Generative AI

Explore the implications for the future of collaborative robotics and human-centered AI and how generative AI is the key to understanding physical interaction.

Watch Video (50:18)

Human-Level Performance With Autonomous Vision-Based Drones

See how autonomous vision-based drones can achieve unprecedented speed and robustness by relying solely on onboard computing.

Watch Video (42:45)

Elevate Your Robotics Game: Unleash High Performance With Isaac ROS & Isaac SIM

Learn about the NVIDIA Isaac Robot Operating System with a hands-on tutorial showing how to use the Isaac ROS dev container and how to run Isaac ROS perception packages on NVIDIA Jetson.

Watch Video (01:35:30)

Bringing Generative AI and Vision AI to Production at the Edge With Metropolis Microservices for Jetson

Explore the latest NVIDIA Metropolis microservices for Jetson and how to bring powerful generative AI and vision AI applications to production.

Watch Video (47:10)

Closing the Sim-to-Real Gap: Training Spot Quadruped Locomotion With NVIDIA Isaac Lab

Discover how to seamlessly deploy quadrupeds from the virtual to the real world with NVIDIA Isaac Lab.

Read Blog

Supercharge Robotics Workflows With AI and Simulation Using NVIDIA Isaac Sim 4.0 and NVIDIA Isaac Lab

In this blog, we show how the latest release of Isaac Sim 4.0 brings powerful new features and AI enhancements to robotics simulation.

Read Blog

Step Into the Future of Industrial-Grade Edge AI With NVIDIA Jetson AGX Orin Industrial

Explore how to deploy AI and compute for sensor fusion in these complex environments with the NVIDIA Jetson edge AI and robotics platform.

Read Blog

Transforming 2D Imagery Into 3D Geospatial Tiles With Neural Radiance Fields

Traditional workflows for transforming images into a 3D scene tend to be slow and cumbersome. Explore how neural radiance fields simplify creating 3D content from overlapping 2D images.

Watch Video (49:00)

Addressing AV Deployment Policy Issues and Introducing a New Digital Proving Ground

Deploying autonomous vehicles requires the adoption of robust and effective policies. Find out how a new digital proving ground will play a pivotal role in shaping the future of transportation.

Watch Video (50:16)

Enhancing Digital Twins With AI for Wildland Fire Management

See Lockheed Martin demonstrate how to reconstruct actual wildland fire incidents in 3D space using Omniverse Kit, Nucleus, and Universal Scene Description.

Watch Video (39:19)

Ray-Tracing RF-Propagation Digital Twin Solution for 6G Applications Using NVIDIA Omniverse

See how a digital twin of a radio environment offers seamless progression from ray-tracing simulation to real lab-based emulation.

Watch Video (49:18)

How to Use 3D Geospatial Data for Immersive Environments With Cesium

Discover how to process 3D geospatial data to enhance the realism, accuracy, and effectiveness of simulations across various domains.

Read Blog

How to Train an Object Detection Model for Visual Inspection With Synthetic Data

Learn how to harness synthetic data for training models on diverse, randomized data that closely resemble real-world scenarios and address dataset gaps.

Read Blog

Transferring Industrial Robot Assembly Tasks From Simulation to Reality

Find out how to use reinforcement learning for challenging robotic assembly tasks and seamlessly transfer tasks from simulation to reality.

Read Blog

A Hacker's Guide to Using GenAI and Software-Defined Radio for RF Spectrum Exploration

Learn how to use generative AI in conjunction with sensor processing applications and software-defined radio to listen to and record radio frequency signals.

Watch Video (46:53)

NVIDIA Holoscan, the AI Sensor Processing Platform, From Surgery to Satellites

Learn how Holoscan enables developers to build AI-enabled sensor processing pipelines with speedy data movement, accelerated compute, real-time visualization, and super low-latency AI inferencing.

Watch Video (23:10)

Out of the Lab and Into the Field: A Model for Modern RF Systems

Learn how accelerated compute and networking hardware enable experimental research software to be sped up for mission-critical applications, running on commercial off-the-shelf hardware in deployed radar systems.

Watch Video (51:07)

A New Era of Sensor Processing

New system architectures are needed to keep up with the growing number of high-bandwidth, low-latency processing requirements. Learn about the future of sensor processing architectures.

Watch Video (47:06)

Performant Object Recognition Model Trained With On-Demand Synthetic Data

Explore how a model trained on synthetic data can perform reliably in the real world, and how to ensure viable data is generated to create a useful training set.

Watch Video (46:20)

Disaster Risk Monitoring Using Satellite Imagery

Learn how to build and deploy a deep learning model to automate the detection of flood events using satellite imagery.

Enroll Now

NVIDIA Deep Learning Institute

The NVIDIA Deep Learning Institute (DLI) offers hands-on training in AI and accelerated computing to solve real-world problems. Training is available as self-paced, online courses or instructor-led workshops.

Generative AI With Diffusion Models

Take a deeper dive into denoising diffusion models, a popular choice for text-to-image pipelines that is disrupting several industries.

Enroll Now

Easily Develop Advanced 3D Layout Tools on NVIDIA Omniverse

Get hands-on experience with NVIDIA Omniverse—the platform for connecting and creating physically accurate, 3D virtual worlds. Learn how to build your own custom scene layout in Omniverse with hands-on exercises in Omniverse Code and Python.

Enroll Now

Building AI-Based Cybersecurity Pipelines

Learn how to build Morpheus pipelines to process and perform AI-based inference on massive amounts of data for cybersecurity use cases in real time.

Enroll Now

Fundamentals of Accelerated Data Science

Learn how to build and execute end-to-end GPU-accelerated data science workflows that enable you to quickly explore, iterate, and get your work into production.

Enroll Now

Programs For You

Developer Resources

The NVIDIA Developer Program provides the advanced tools and training needed to successfully build applications on all NVIDIA technology platforms. This includes access to hundreds of SDKs, a network of like-minded developers through our community forums, and more.

Accelerate Your Startup

NVIDIA Inception—an acceleration platform for AI, data science, and HPC startups—supports over 7,000 startups worldwide with go-to-market support, expertise, and technology. Startups get access to training through the DLI, preferred pricing on hardware, and invitations to exclusive networking events.

NVIDIA News for the Public Sector

NVIDIA GB200 NVL72 Delivers Trillion-Parameter LLM Training and Real-Time Inference

Fueled by the new NVIDIA Blackwell architecture, the groundbreaking NVIDIA GB200 NVL72 arrives to power a new era of computing and generative AI with unparalleled performance, efficiency, and scale.

NVIDIA NIM Offers Optimized Inference Microservices for Deploying AI Models at Scale

Unlock the full potential of your generative AI models with NVIDIA NIM. Transform the way you deploy these models across diverse platforms, including cloud, data center, and workstation.