NVIDIA NeMo Evaluator for Developers

The NVIDIA NeMo Evaluator™ microservice simplifies the end-to-end evaluation of generative AI applications, including retrieval-augmented generation (RAG) and agentic AI, through an easy-to-use API. It provides LLM-as-a-judge capabilities along with a comprehensive suite of benchmarks and metrics for a wide range of custom tasks and domains, including reasoning, coding, and instruction following.

You can seamlessly integrate NeMo Evaluator into your CI/CD pipelines and build data flywheels for continuous evaluation, helping AI systems maintain high accuracy over time. NeMo Evaluator’s flexible cloud-native architecture lets you deploy it wherever your data resides: on premises, in a private cloud, or across public cloud providers. Because evaluation jobs are easy to set up and launch, you can evaluate more efficiently and reach the market faster.

See NVIDIA NeMo Evaluator in Action

Watch this demo to learn how you can leverage NeMo microservices to customize and evaluate AI agents for tool calling. You will also learn how to install the microservices using a Helm chart and interact with them through APIs.

How NVIDIA NeMo Evaluator Works

NeMo Evaluator provides an easy-to-use API for evaluating generative AI models, including large language models (LLMs), embedding models, and re-ranking models. Simply provide the evaluation dataset, model name, and evaluation type in the API payload. NeMo Evaluator then launches a job to evaluate the model and returns the results as a downloadable archive.
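The request described above can be sketched in Python. This is a minimal illustration under stated assumptions: the field names (`model_name`, `dataset`, `evaluation_type`, `params`) and the example values are hypothetical placeholders, not the actual NeMo Evaluator request schema, and the sketch only builds the JSON body without sending it. Check the NeMo Evaluator API reference for the real endpoint and payload format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class EvaluationRequest:
    """Hypothetical request shape holding the three inputs named in the text."""
    model_name: str        # model to evaluate
    dataset: str           # location of the evaluation dataset (placeholder)
    evaluation_type: str   # e.g. "llm-as-a-judge", "similarity" (hypothetical labels)
    params: dict = field(default_factory=dict)  # optional extra settings


def build_payload(request: EvaluationRequest) -> str:
    """Validate the request and serialize it to a JSON string for an HTTP POST body."""
    if not request.model_name or not request.dataset:
        raise ValueError("model_name and dataset are required")
    return json.dumps(asdict(request), indent=2)


if __name__ == "__main__":
    req = EvaluationRequest(
        model_name="my-org/my-model",      # hypothetical model name
        dataset="path/to/eval_set.jsonl",  # hypothetical dataset path
        evaluation_type="similarity",
        params={"metrics": ["f1", "rouge"]},
    )
    print(build_payload(req))
```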

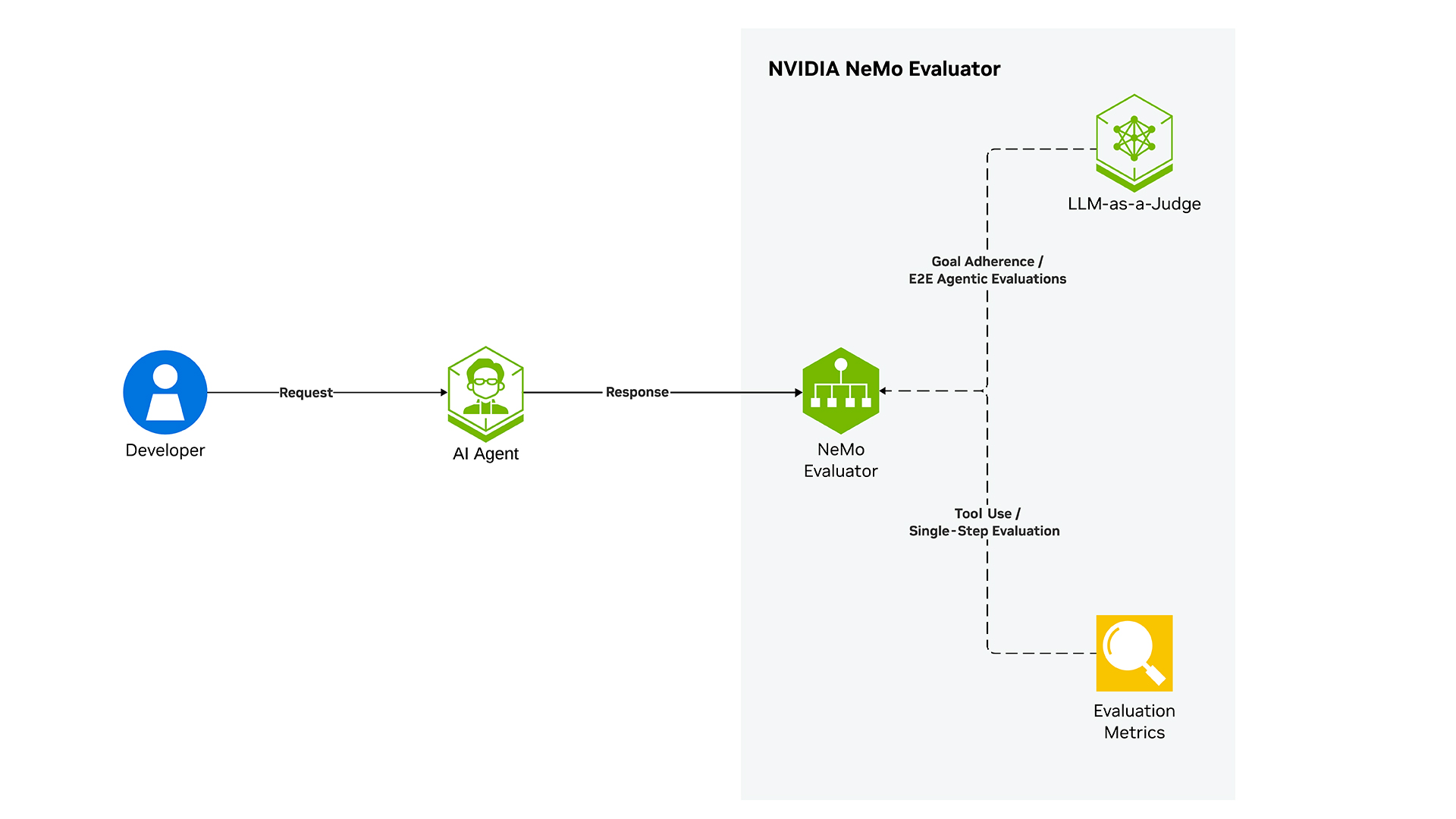

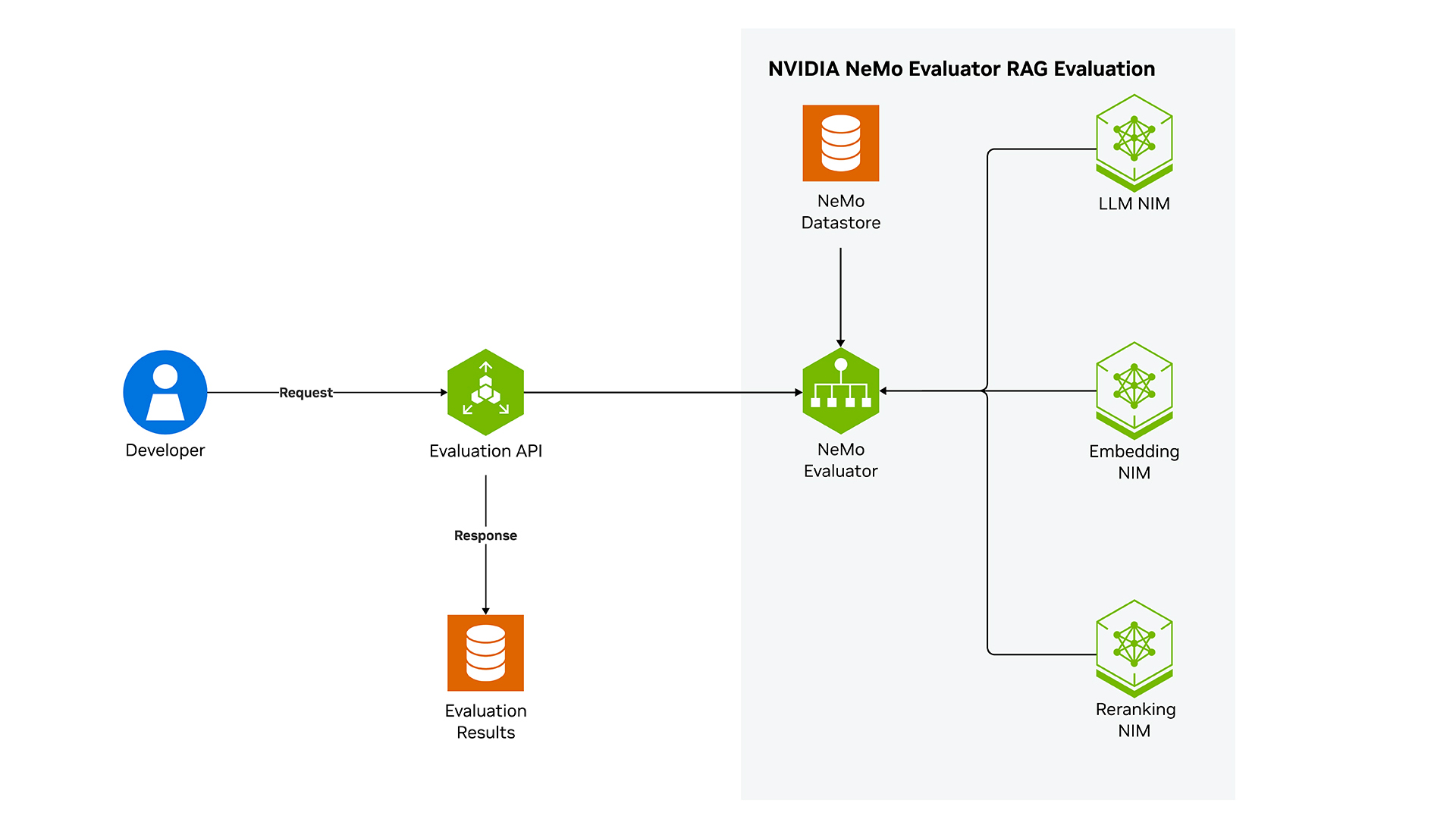

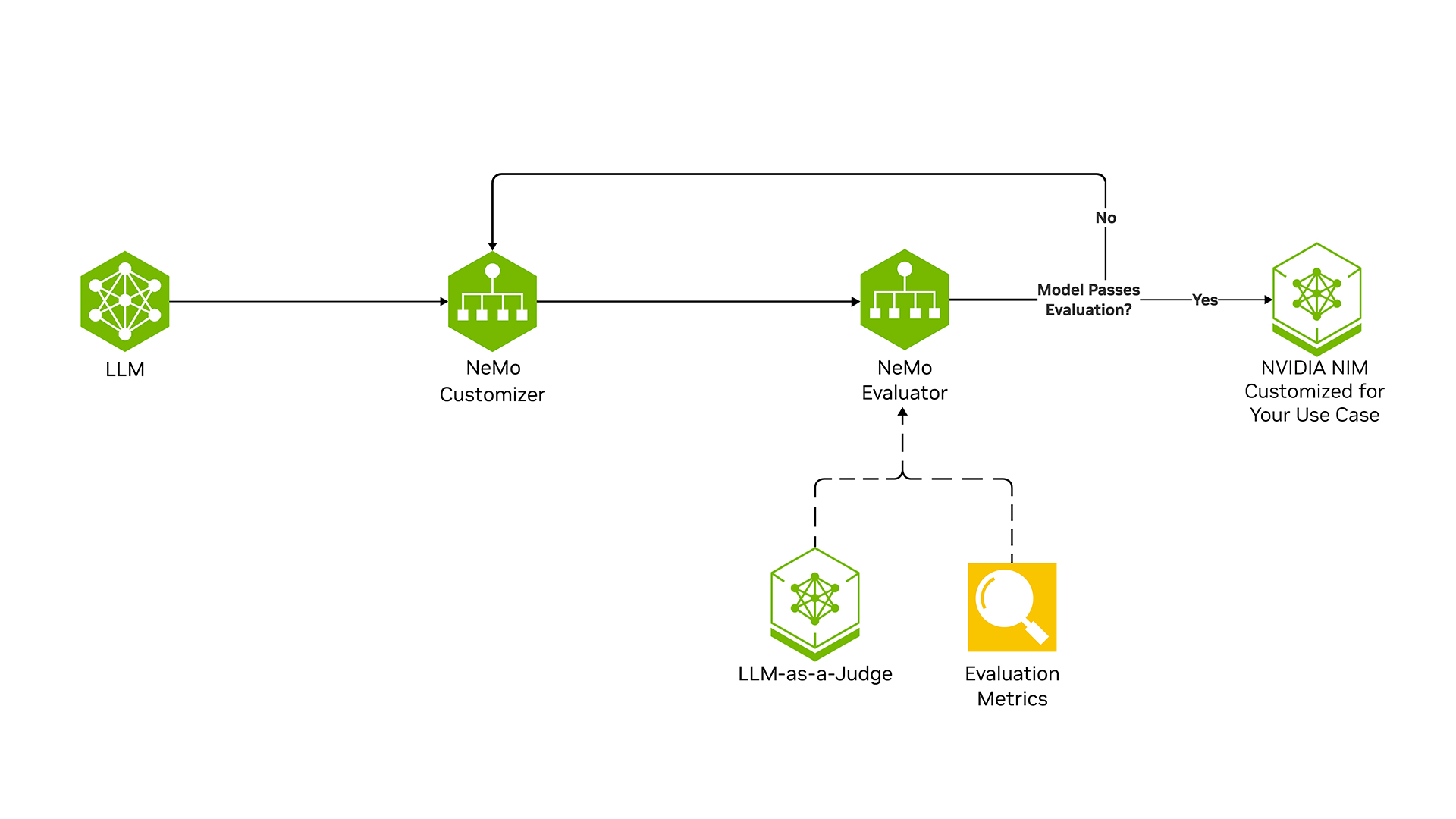

The architecture diagrams illustrate the flow for using NeMo Evaluator to assess the performance of various generative AI systems.

Evaluating AI Agents

NeMo Evaluator provides a custom metric for evaluating tool calling by AI agents. With this metric, you can check whether the agent called the right function with the right parameters.

Alternatively, you can evaluate the agent's outputs with LLM-as-a-judge.
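To make the tool-calling check concrete, here is a hypothetical sketch of how such a metric might compare an agent's calls with the expected calls. It is not NeMo Evaluator's implementation; the `{"name": ..., "arguments": ...}` call format and the two scores it reports are assumptions chosen for illustration.

```python
from typing import Any, Dict, List

# Assumed call format: {"name": "<function name>", "arguments": {...}}
ToolCall = Dict[str, Any]


def tool_call_scores(predicted: List[ToolCall], expected: List[ToolCall]) -> Dict[str, float]:
    """Compare predicted tool calls with expected ones, position by position.

    Returns two rates over the expected calls:
      - name_accuracy: the function name matches
      - full_accuracy: the function name and all arguments match exactly
    """
    if not expected:
        raise ValueError("expected must contain at least one tool call")
    name_hits = 0
    full_hits = 0
    for i, exp in enumerate(expected):
        if i >= len(predicted):
            break  # missing predictions count as misses
        pred = predicted[i]
        if pred.get("name") == exp["name"]:
            name_hits += 1
            if pred.get("arguments", {}) == exp.get("arguments", {}):
                full_hits += 1
    n = len(expected)
    return {"name_accuracy": name_hits / n, "full_accuracy": full_hits / n}
```

Comparing calls position by position keeps the sketch simple: it ignores extra predicted calls beyond the expected ones and treats argument dictionaries as equal only when they match exactly. A production metric might allow reordering or type-tolerant argument matching.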

Evaluating RAG Pipelines

For retrieval-augmented generation pipelines, you can evaluate each stage separately by measuring accuracy metrics for the LLM generator, the embedding model, and the reranking model.
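For the retrieval stage, two widely used metrics are recall@k and nDCG@k. The sketch below computes both with binary relevance labels; whether NeMo Evaluator reports these exact metrics, or computes them this way, is an assumption here, and the document IDs are placeholders.

```python
import math
from typing import Sequence, Set


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        raise ValueError("relevant must be non-empty")
    top_k = set(retrieved[:k])
    return len(top_k & relevant) / len(relevant)


def ndcg_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Binary-relevance nDCG@k: rewards placing relevant documents near the top.

    Assumes the retrieved list contains no duplicate IDs.
    """
    # rank is 0-based, so the first position gets weight 1 / log2(2) = 1
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, doc in enumerate(retrieved[:k])
        if doc in relevant
    )
    # Ideal DCG: all relevant documents ranked first
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0
```

For example, if the retriever returns `["d3", "d1", "d7"]` and the relevant set is `{"d1", "d2"}`, recall@3 is 0.5 because only one of the two relevant documents was retrieved.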

NeMo Evaluator also supports offline evaluation—simply provide the RAG queries, responses, and metrics in the API payload.
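Because offline evaluation scores responses that were already generated, the payload carries the RAG outputs themselves rather than a model to call. The sketch below assembles such a payload; the record fields (`query`, `contexts`, `response`) and the metric name in the example are hypothetical, not NeMo Evaluator's documented schema.

```python
import json
from typing import Dict, List, Sequence

# Hypothetical schema: each record holds one query, its retrieved contexts,
# and the response the RAG pipeline already produced.
REQUIRED_FIELDS = ("query", "contexts", "response")


def build_offline_payload(records: Sequence[Dict], metrics: List[str]) -> Dict:
    """Validate precomputed RAG records and bundle them with the requested metrics."""
    if not metrics:
        raise ValueError("at least one metric is required")
    for i, rec in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if f not in rec]
        if missing:
            raise ValueError(f"record {i} is missing fields: {missing}")
    return {"metrics": list(metrics), "records": list(records)}


if __name__ == "__main__":
    payload = build_offline_payload(
        [{
            "query": "What is NeMo Evaluator?",
            "contexts": ["NeMo Evaluator is a microservice for evaluating generative AI."],
            "response": "A microservice for evaluating generative AI applications.",
        }],
        metrics=["faithfulness"],  # hypothetical metric name
    )
    print(json.dumps(payload, indent=2))
```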

Evaluating Custom Models

To tailor models to enterprise needs, use NeMo Customizer and NeMo Evaluator together: keep refining and testing until you reach the desired accuracy, then evaluate on academic benchmarks to confirm there is no regression in accuracy.
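The final regression check in that loop can be sketched as a comparison of benchmark scores before and after customization. In this hypothetical helper, the score scale, the benchmark names, and the default 0.01 tolerance are illustrative assumptions, not NeMo Evaluator output or recommended thresholds.

```python
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    customized: Dict[str, float],
    tolerance: float = 0.01,
) -> List[str]:
    """Return the benchmarks where the customized model scores lower than the
    baseline by more than `tolerance` (scores assumed to be in [0, 1])."""
    regressions = []
    for bench, base_score in baseline.items():
        if bench not in customized:
            raise KeyError(f"missing score for benchmark {bench!r}")
        if base_score - customized[bench] > tolerance:
            regressions.append(bench)
    return sorted(regressions)
```

An absolute tolerance keeps the check simple; a relative tolerance or a per-benchmark threshold may suit benchmarks whose scores vary on different scales.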

The customized model can be deployed as an NVIDIA NIM™ microservice for higher throughput and lower latency.

Introductory Resources

Introductory Blog

Read how NeMo Evaluator simplifies end-to-end evaluation of generative AI systems.

Tutorial Notebook

Explore tutorials designed to help you evaluate generative AI models with the NeMo Evaluator microservice.

GTC Session

Understand how NeMo Evaluator, along with other NeMo microservices, facilitates the customization of generative AI models and supports ongoing performance evaluation, ensuring models remain relevant and effective over time.

How-To Blog

Dive deeper into how NVIDIA NeMo microservices help build data flywheels with a case study and a quick overview of the steps in an end-to-end pipeline.

Ways to Get Started With NVIDIA NeMo Evaluator

Use the right tools and technologies to assess generative AI models and pipelines across academic and custom benchmarks on any platform.

Develop

Get free access to the NeMo Evaluator microservice for research, development, and testing.

Deploy

Get a free license to try NVIDIA AI Enterprise in production for 90 days using your existing infrastructure.

Performance

NeMo microservices provide simple APIs to launch customization and evaluation jobs. An end-to-end customization job takes only 5 calls with NeMo microservices, compared with 21 steps with other libraries.

Simplify Generative AI Application Evaluation With NeMo Evaluator

The benchmark compares the number of steps required for end-to-end evaluation of a customization job using NeMo microservices and using a leading open-source alternative library.

Starter Kits

Start evaluating your generative AI applications using the following features from NeMo Evaluator.

LLM-as-a-Judge

LLM-as-a-judge is used when traditional evaluation methods are impractical because the judgment is subjective. It helps assess open-ended responses, compare model outputs, automate human-like judgments, and evaluate RAG or agent-based systems.

This approach is useful when no single correct answer exists, because it provides structured, consistent scoring. LLM-as-a-judge covers a wide range of scenarios, including model evaluation (MT-Bench), RAG, and agents.
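The core loop of LLM-as-a-judge is to wrap the question and response in a grading prompt, send it to a judge model, and parse a structured score from the reply. The sketch below shows that loop; the prompt wording, the 1 to 10 scale, and the `Rating: <n>` format are assumptions, and `call_llm` is a hypothetical stand-in for whatever client you use to query the judge model.

```python
import re
from typing import Callable

# Hypothetical grading prompt; real judge prompts are usually more detailed.
JUDGE_TEMPLATE = (
    "You are an impartial judge. Rate the response to the question on a scale "
    "of 1 to 10 for helpfulness and accuracy.\n\n"
    "Question: {question}\n"
    "Response: {response}\n\n"
    'Explain your reasoning, then end with a line of the form "Rating: <n>".'
)


def judge(question: str, response: str, call_llm: Callable[[str], str]) -> int:
    """Ask a judge model to score a response and parse the 1-10 rating.

    `call_llm` is any function that sends a prompt to an LLM and returns its text.
    """
    prompt = JUDGE_TEMPLATE.format(question=question, response=response)
    reply = call_llm(prompt)
    matches = re.findall(r"Rating:\s*(\d+)", reply)
    if not matches:
        raise ValueError("judge reply did not contain a rating")
    score = int(matches[-1])  # use the last rating in case the judge revises itself
    if not 1 <= score <= 10:
        raise ValueError(f"rating out of range: {score}")
    return score
```

Passing the judge as a plain callable keeps the sketch independent of any particular client library and makes the parsing easy to test with a canned reply: `judge("2+2?", "4", lambda prompt: "Correct and concise.\nRating: 9")` returns `9`.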

Similarity Metrics

NeMo Evaluator supports evaluation of custom datasets specific to enterprise requirements. These evaluations use similarity metrics such as F1 score and ROUGE to measure how well LLMs or retrieval models handle domain-specific queries.

Similarity metrics help enterprises determine whether the model can reliably answer user questions and maintain consistency across different scenarios.
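As an illustration of how these two metrics differ, the sketch below computes SQuAD-style token-overlap F1 and a ROUGE-L F-measure from scratch. The tokenizer is a simplification (the official SQuAD script also strips articles), ROUGE-L here weights precision and recall equally, and NeMo Evaluator's own implementations may normalize text differently.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase and split on non-alphanumeric characters (a simplified normalizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """SQuAD-style token-overlap F1 between a prediction and a reference."""
    pred, ref = tokenize(prediction), tokenize(reference)
    common = Counter(pred) & Counter(ref)  # multiset intersection of tokens
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def rouge_l_f1(prediction: str, reference: str) -> float:
    """ROUGE-L F-measure based on the longest common subsequence (LCS) of tokens."""
    pred, ref = tokenize(prediction), tokenize(reference)
    if not pred or not ref:
        return 0.0
    # dp[i][j] holds the LCS length of pred[:i] and ref[:j]
    dp = [[0] * (len(ref) + 1) for _ in range(len(pred) + 1)]
    for i, p in enumerate(pred, 1):
        for j, r in enumerate(ref, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if p == r else max(dp[i - 1][j], dp[i][j - 1])
    lcs = dp[-1][-1]
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(pred), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For the prediction "The capital of France is Paris" and the reference "Paris is the capital of France", token F1 is 1.0 because the two share every token, while ROUGE-L F is about 0.67 because the longest in-order match ("the capital of france") covers only 4 of 6 tokens. F1 rewards word overlap regardless of order; ROUGE-L also rewards preserving word order.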

Academic Benchmarks

Academic benchmarks are widely used by model publishers to assess performance across various tasks and domains.

These benchmarks, such as MMLU (for knowledge), HellaSwag (for reasoning), and GSM8K (for math), provide a standardized way to compare models across various dimensions. With NeMo Evaluator, model developers can quickly check for regressions after customization.
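To show what scoring a benchmark like GSM8K involves, here is a simplified exact-match scorer. GSM8K reference solutions end with a line of the form `#### <answer>`; taking the last number in the model's output as its prediction is a common heuristic assumed here, not necessarily the extraction rule NeMo Evaluator applies.

```python
import re
from typing import List, Optional

# Matches integers or decimals, optionally negative, with thousands separators
NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_reference(answer: str) -> str:
    """GSM8K reference solutions end with '#### <final answer>'."""
    return answer.split("####")[-1].strip().replace(",", "")


def extract_prediction(output: str) -> Optional[str]:
    """Take the last number in the model output as its final answer (a heuristic)."""
    matches = NUMBER.findall(output)
    return matches[-1].replace(",", "") if matches else None


def is_correct(output: str, reference: str) -> bool:
    """Compare numerically so that '72.0' and '72' count as the same answer."""
    pred = extract_prediction(output)
    if pred is None:
        return False
    try:
        return float(pred) == float(extract_reference(reference))
    except ValueError:
        return False


def gsm8k_accuracy(outputs: List[str], references: List[str]) -> float:
    """Exact-match accuracy of extracted final answers."""
    if not outputs or len(outputs) != len(references):
        raise ValueError("need equal-length, non-empty lists")
    return sum(is_correct(o, r) for o, r in zip(outputs, references)) / len(outputs)
```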


More Resources

Ethical AI

NVIDIA believes Trustworthy AI is a shared responsibility, and we have established policies and practices to enable development for a wide array of AI applications. When downloading or using this model in accordance with our terms of service, developers should work with their internal model team to ensure it meets requirements for the relevant industry and use case and addresses unforeseen product misuse. Please report security vulnerabilities or NVIDIA AI concerns here.

Get started with NeMo Evaluator today.