NVIDIA DeepStream SDK

DeepStream’s multi-platform support gives you a faster, easier way to develop vision AI applications and services. You can even deploy them on premises, on the edge, and in the cloud with just the click of a button.

What is NVIDIA DeepStream?

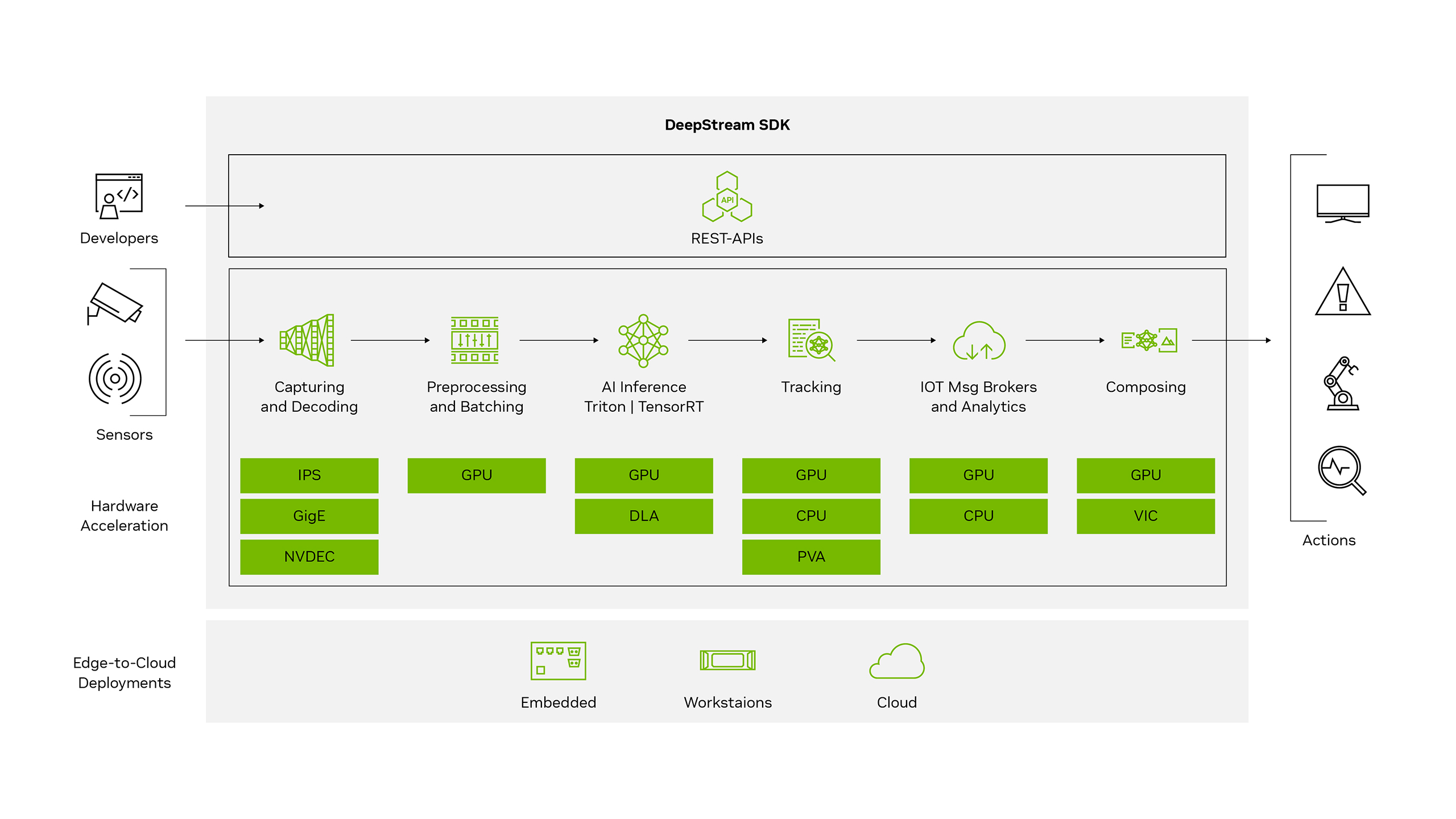

NVIDIA’s DeepStream SDK is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing and video, audio, and image understanding. It’s ideal for vision AI developers, software partners, startups, and OEMs building intelligent video analytics (IVA) apps and services.

You can now create stream-processing pipelines that incorporate neural networks and other complex processing tasks like tracking, video encoding/decoding, and video rendering. These pipelines enable real-time analytics on video, image, and sensor data.

Benefits

Powerful and Flexible SDK

DeepStream SDK is ideal for a wide range of use cases across a broad set of industries.

Multiple Programming Options

Create powerful vision AI applications using C/C++, Python, or Graph Composer’s simple and intuitive UI.

Real-Time Insights

Understand rich and multi-modal real-time sensor data at the edge.

Managed AI Services

Deploy AI services in cloud native containers and orchestrate them using Kubernetes.

Reduced TCO

Increase stream density by training, adapting, and optimizing models with TAO Toolkit and deploying models with DeepStream.

Unique Capabilities

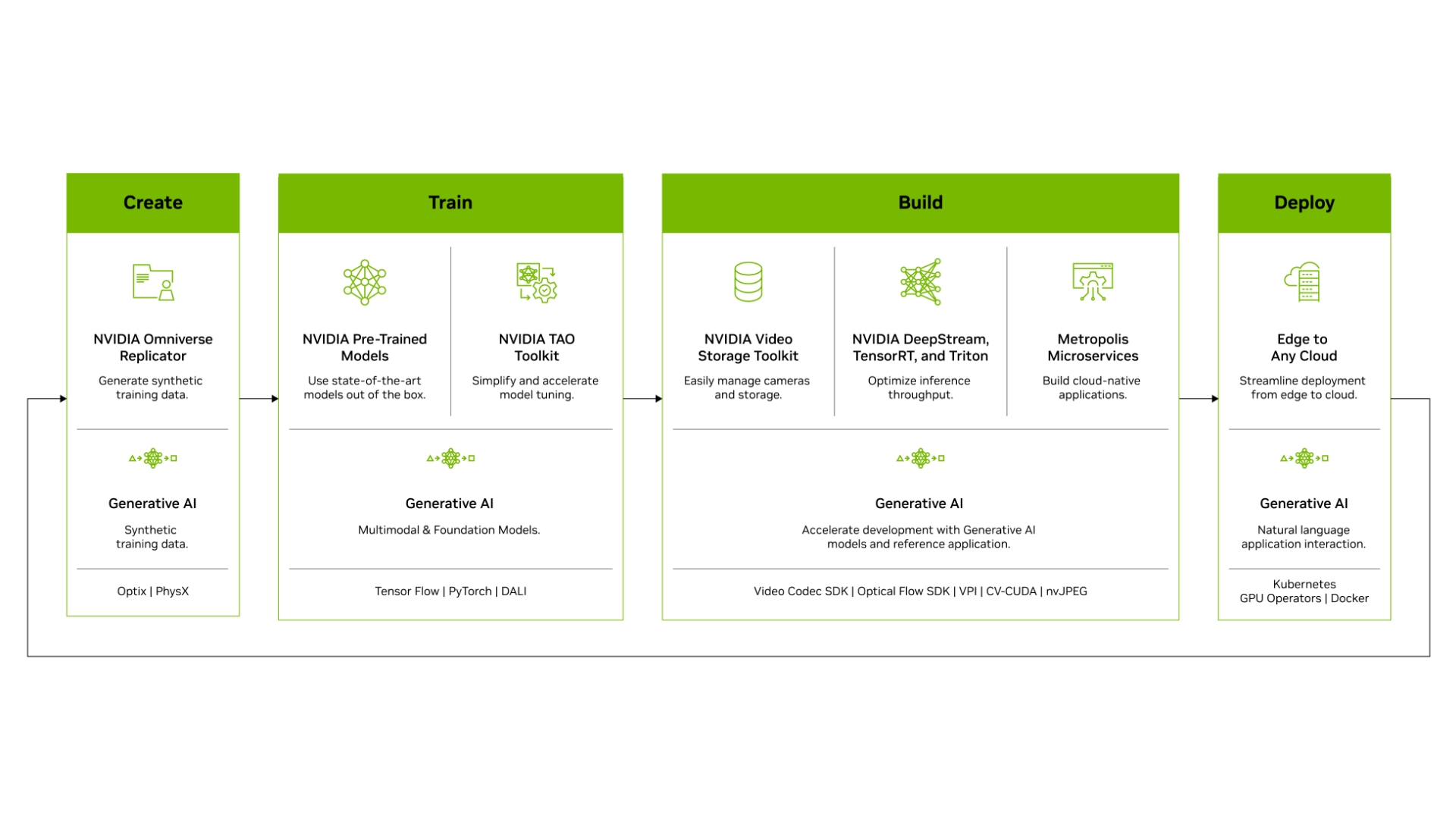

Enjoy Seamless Development From Edge to Cloud

DeepStream gives you a faster, easier way to build seamless streaming pipelines for AI-based video, audio, and image analytics. It ships with 40+ hardware-accelerated plugins and extensions to optimize pre/post processing, inference, multi-object tracking, message brokers, and more. Plus, it offers some of the world's best-performing real-time, multi-object trackers.

Use DeepStream’s off-the-shelf containers to easily build cloud native applications that can be deployed on public and private clouds, on workstations powered with NVIDIA GPUs, or on NVIDIA Jetson. Its “develop once, deploy anywhere” approach simplifies code management and provides great scalability. The DeepStream Container Builder tool also makes it easier to build high-performance, cloud-native AI applications with NVIDIA NGC containers that are easily deployed at scale and managed with Kubernetes and Helm Charts.

DeepStream REST APIs let you manage multiple parameters at run-time, simplifying the creation of SaaS solutions. With a standard REST API interface, you can build web portals for control and configuration or integrate DeepStream into your existing applications.

Build End-to-End AI Solutions

Speed up overall development efforts and unlock greater real-time performance by building an end-to-end vision AI system with NVIDIA Metropolis. Start with production-quality vision AI models, adapt and optimize them with TAO Toolkit, and deploy using DeepStream.

Get incredible flexibility, from rapid prototyping to full production-level solutions, and choose your inference path. With native integration with NVIDIA Triton™ Inference Server, you can deploy models in native frameworks such as PyTorch and TensorFlow for inference. Using NVIDIA TensorRT™ for high-throughput inference, with options for multi-GPU, multi-stream, and batching support, also helps you achieve the best possible performance.

PipeTuner 1.0, a new developer tool, now makes it easy to tune a wide range of parameters to optimize AI pipelines for inference and tracking.

Accelerate Vision AI Development

The DeepStream SDK is bundled with 30+ sample applications designed to help you kick-start your development efforts. Most samples are available in C/C++, Python, and Graph Composer versions and run on both NVIDIA Jetson and dGPU platforms. With support for Windows Subsystem for Linux (WSL2), you can now develop in Windows environments without the need to access remote Linux systems.

DeepStream Service Maker simplifies the development process by abstracting the complexities of GStreamer so you can easily build object-oriented C++ applications. Use Service Maker to build complete DeepStream pipelines with a few lines of code.

DeepStream Libraries, powered by CV-CUDA, NvImageCodec, and PyNvVideoCodec, offer low-level GPU-accelerated operations to optimize the pre- and post-processing stages of vision AI pipelines.

Graph Composer gives DeepStream developers a powerful, low-code development option to create complex pipelines and quickly deploy them using Container Builder.

Create Next-Generation AI Applications

Tight scheduling control, custom schedulers, and efficient resource management are all critical to integrating with deterministic systems such as robotic arms and automated quality control lines.

With the introduction of Graph eXecution Format (GXF), it’s easy to integrate with control signals that operate on a different time domain than the vision streaming sensors being processed by a DeepStream pipeline.

New reference applications help you jump-start development of generative AI applications. New support for sensor fusion with BEVFusion adds both lidar and radar inputs that can be fused with camera inputs, opening up a new range of use cases for developers.

Get a Production-Ready Solution for Vision AI

DeepStream is available as a part of NVIDIA AI Enterprise, an end-to-end, secure, cloud-native AI software platform optimized to accelerate enterprises to the leading edge of AI.

NVIDIA AI Enterprise delivers validation and integration for NVIDIA AI open-source software, access to AI solution workflows to speed time to production, certifications to deploy AI everywhere, and enterprise-grade support, security, and API stability to mitigate the potential risks of open-source software.

Explore Multiple Programming Options

C/C++

Create applications in C/C++, interact directly with GStreamer and DeepStream plug-ins, and use reference applications and templates.

Python

DeepStream pipelines can be constructed using Gst Python, the GStreamer framework's Python bindings. The source code for the bindings and the Python sample applications is available on GitHub.
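As a rough illustration of what a Python-built pipeline can look like, here is a minimal sketch that assembles a gst-launch style description for a single-stream pipeline. The element names (`uridecodebin`, `nvstreammux`, `nvinfer`, `nvvideoconvert`, `nvdsosd`, `nveglglessink`) and their properties are assumed from the DeepStream plugin set and are not listed on this page. The helper `build_pipeline_description`, the sample URI, and the config file name are hypothetical. The sketch only builds the string, so it runs without GStreamer installed.

```python
def build_pipeline_description(uri, infer_config, width=1280, height=720):
    """Return a gst-launch style description for one input stream.

    Hypothetical helper: element and property names are assumptions
    about the DeepStream plugin set, not taken from this page.
    """
    elements = [
        # Decode any supported URI (file, RTSP, ...).
        f"uridecodebin uri={uri}",
        # Link the decoder to the first sink pad of the stream muxer,
        # which batches frames before inference.
        f"m.sink_0 nvstreammux name=m batch-size=1 width={width} height={height}",
        # Run the primary detector described by the config file.
        f"nvinfer config-file-path={infer_config}",
        # Convert the buffer format for the on-screen display.
        "nvvideoconvert",
        # Draw bounding boxes and labels.
        "nvdsosd",
        # Render to the screen.
        "nveglglessink",
    ]
    return " ! ".join(elements)


if __name__ == "__main__":
    description = build_pipeline_description(
        "file:///tmp/sample.mp4",   # hypothetical input
        "pgie_config.txt",          # hypothetical nvinfer config
    )
    print(description)
    # With PyGObject and DeepStream installed, the string could be handed
    # to GStreamer roughly like this:
    #   import gi
    #   gi.require_version("Gst", "1.0")
    #   from gi.repository import Gst
    #   Gst.init(None)
    #   pipeline = Gst.parse_launch(description)
    #   pipeline.set_state(Gst.State.PLAYING)
```

The Python sample applications typically create and link elements one by one instead, which gives finer control over pads and metadata probes. A description string like this one is mainly handy for quick experiments.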

Graph Composer

Graph Composer is a low-code development tool that enhances the DeepStream user experience. With its simple, intuitive UI, you construct processing pipelines using drag-and-drop operations.

Improve Accuracy and Real-Time Performance

| Application | Models | Tracker | Infer Resolution | Precision | Jetson Orin Nano GPU | Jetson Orin NX GPU | Jetson Orin NX DLA1 | Jetson Orin NX DLA2 | Jetson Orin AGX GPU | Jetson Orin AGX DLA1 | Jetson Orin AGX DLA2 | T4 GPU | A2 GPU | A10 GPU | A30 GPU | A100 GPU | H100 GPU | L40 GPU | L4 GPU | Quadro (A6000) GPU | A4000 GPU | L4000 GPU | ARM SBSA GPU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| People Detect | PeopleNet-ResNet34 (v2.3.3) | No Tracker | 960x544 | INT8 | 256 | 372 | 175 | 175 | 970 | 329 | 329 | 912 | 610 | 2059 | 3273 | 4952 | 6920 | 4443 | 1674 | 2787 | 1282 | 1512 | 6977 |
| People Detect | PeopleNet-ResNet34 (v2.3.3) | NvDCF (Accuracy) | 960x544 | INT8 | 82 | 128 | 77 | 77 | 318 | 196 | 196 | 429 | 295 | 1009 | 1229 | 2040 | 2936 | 1870 | 701 | 1301 | 746 | 623 | 3613 |
| People Detect | PeopleNet-ResNet34 (v2.3.3) | NvDCF (Performance) | 960x544 | INT8 | 215 | 315 | 170 | 170 | 625 | 310 | 310 | 866 | 568 | 2063 | 2806 | 4250 | 6103 | 4278 | 1563 | 2855 | 1277 | 1413 | 5788 |
| License Plate Recognition | TrafficCamNet, LPDNet, LPRNet | NvDCF | 960x544, 640x480, 96x48 | INT8, INT8, FP16 | 120 | 180 | - | - | 370 | - | - | 382 | 253 | 1071 | 1327 | 2150 | 2801 | 2280 | 741 | 1404 | 788 | 670 | N/A |
| 3D Body Pose Estimation | PeopleNet-ResNet34, BodyPose3D | NvDCF | 960x544, 192x256 | INT8, FP16 | 28 | -40 | - | - | 76 | - | - | 101 | 67 | 160 | 128 | 151 | 255 | 226 | 200 | 235 | 148 | 104 | 313 |
| Action Recognition | ActionRecognitionNet (3DConv) | No Tracker | 224x224x3x32 | FP16 | 34 | 51 | - | - | 147 | - | - | 173 | 74 | 450 | 552 | 996 | 1270 | 870 | 313 | 638 | 319 | 300 | 1910 |

RTX GPU performance is reported only for flagship products. All SKUs support DeepStream.

The DeepStream SDK lets you apply AI to streaming video while simultaneously optimizing video decode/encode, image scaling and conversion, and edge-to-cloud connectivity for complete end-to-end performance optimization.

To learn more about DeepStream performance, check the documentation.
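To get a feel for how tracker choice affects throughput, you can run some quick arithmetic on the People Detect rows of the table above. The sketch below uses the Jetson Orin Nano GPU figures. It assumes the table values are aggregate frames per second, which this page does not state, and it uses a hypothetical 30 fps per camera stream to turn throughput into an approximate stream count. The helper names are illustrative only.

```python
# People Detect throughput on the Jetson Orin Nano GPU, copied from the table.
# Assumption: values are aggregate frames per second across all streams.
ORIN_NANO_PEOPLE_DETECT = {
    "No Tracker": 256,
    "NvDCF (Accuracy)": 82,
    "NvDCF (Performance)": 215,
}


def relative_throughput(values, baseline="No Tracker"):
    """Express each configuration as a fraction of the baseline throughput."""
    base = values[baseline]
    return {name: round(value / base, 2) for name, value in values.items()}


def streams_supported(total_fps, fps_per_stream=30):
    """Approximate number of full-rate streams (30 fps is a hypothetical rate)."""
    return total_fps // fps_per_stream


if __name__ == "__main__":
    ratios = relative_throughput(ORIN_NANO_PEOPLE_DETECT)
    for name, total_fps in ORIN_NANO_PEOPLE_DETECT.items():
        print(
            f"{name}: {total_fps} total, "
            f"{ratios[name]:.2f}x baseline, "
            f"about {streams_supported(total_fps)} streams at 30 fps"
        )
```

Under these assumptions, the accuracy-oriented NvDCF configuration runs at roughly a third of the no-tracker throughput on this device, while the performance-oriented configuration keeps most of it.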

Read Customer Stories

Optimizing Operations at Bengaluru Airport

Industry.AI used the NVIDIA Metropolis stack, including DeepStream, to increase the safety and efficiency of the airport. Using vision AI, it was able to track abandoned baggage, flag long passenger queues, and alert security teams of potential issues.

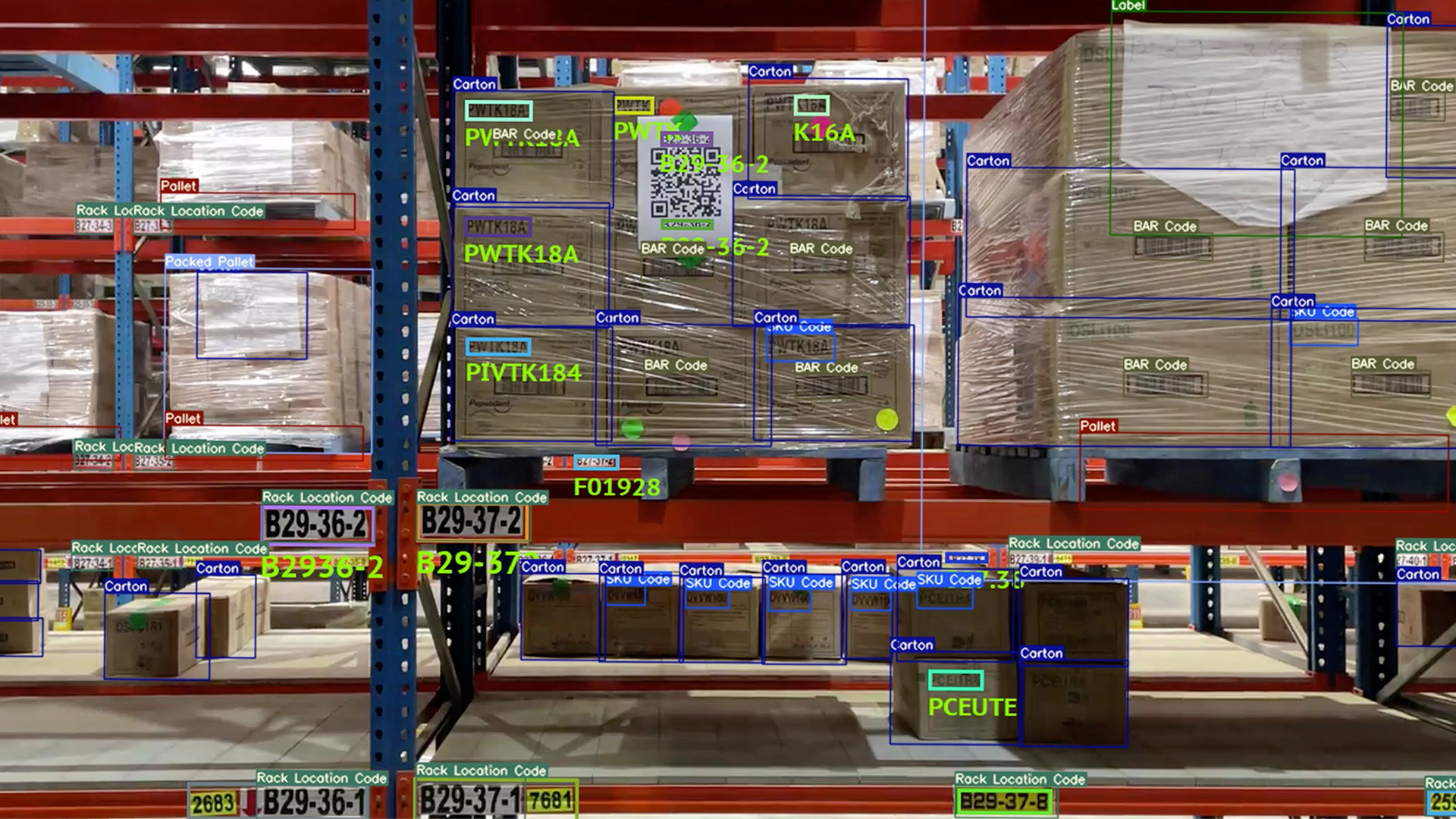

Enhancing Distribution Center Operation

KoiReader developed an AI-powered machine vision solution using NVIDIA developer tools that included DeepStream SDK to help PepsiCo achieve precision and efficiency in dynamic distribution environments.

Scaling AI-Powered Smart Spaces

FYMA used NVIDIA DeepStream and NVIDIA Triton™ to improve AI-powered space analytics with frame rates exceeding previous benchmarks by 10X and accuracy by 3X.

General FAQ

DeepStream is a closed-source SDK. Note that sources for all reference applications and several plugins are available.

The DeepStream SDK can be used to build end-to-end AI-powered applications to analyze video and sensor data. Some popular use cases are retail analytics, parking management, managing logistics, optical inspection, robotics, and sports analytics.

See the Platforms and OS compatibility table.

Yes, that’s now possible with the integration of the Triton Inference Server. Also, with DeepStream 6.1.1, applications can communicate with independent/remote instances of Triton Inference Server using gRPC.

DeepStream supports several popular networks out of the box, such as MaskRCNN. DeepStream also ships with examples for running the popular YOLO models, FasterRCNN, SSD, and RetinaNet.

Yes, DeepStream 6.0 or later supports the Ampere architecture.

Yes, audio is supported with DeepStream SDK 6.1.1. To get started, download the software and review the reference audio and Automatic Speech Recognition (ASR) applications. Learn more by reading the ASR DeepStream Plugin.

Build high-performance vision AI apps and services using DeepStream SDK.