Deep Learning Accelerator (DLA)

NVIDIA’s edge AI platforms provide best-in-class compute for accelerating deep learning workloads. The Deep Learning Accelerator (DLA) is the fixed-function hardware that accelerates deep learning inference on these platforms, and it is paired with an optimized software stack for inference workloads.

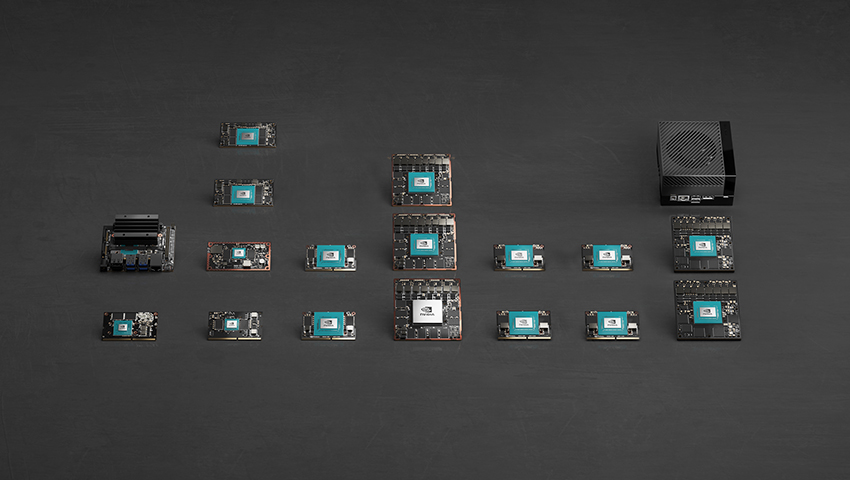

Edge AI Platforms

Take advantage of the DLA cores available on the NVIDIA Orin and Xavier families of SoCs on the NVIDIA Jetson and NVIDIA DRIVE platforms.

NVIDIA Jetson

NVIDIA Jetson brings accelerated AI performance to the edge in a power-efficient and compact form factor. Together with the NVIDIA JetPack SDK, these Jetson modules open the door for you to develop and deploy innovative products across all industries.

NVIDIA DRIVE

NVIDIA DRIVE embedded supercomputing solutions process data from camera, radar, and lidar sensors to perceive the surrounding environment, localize the car to a map, then plan and execute a safe path forward. This AI platform supports autonomous driving, in-cabin functions and driver monitoring, plus other safety features—all in a compact, energy-efficient package.

Hardware and Software Solutions

DLA Hardware

NVIDIA DLA hardware is a fixed-function accelerator engine targeted for deep learning operations. It’s designed to do full hardware acceleration of convolutional neural networks, supporting various layers such as convolution, deconvolution, fully connected, activation, pooling, batch normalization, and others. NVIDIA’s Orin SoCs feature up to two second-generation DLAs while Xavier SoCs feature up to two first-generation DLAs.

DLA supported layers

DLA Software

DLA software consists of the DLA compiler and the DLA runtime stack. The offline compiler translates the neural network graph into a DLA loadable binary and can be invoked using NVIDIA TensorRT. The runtime stack consists of the DLA firmware, kernel mode driver, and user mode driver.

Working with DLA

DLA Workflow

DLA performance is enabled by both hardware acceleration and software. For example, DLA software performs fusions to reduce the number of passes to and from system memory. TensorRT also provides higher-level abstraction to the DLA software stack.
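As a generic illustration of why fusion saves memory traffic, the sketch below folds an inference-time batch normalization into the preceding per-channel linear layer, so one pass produces the result that previously took two. This is a textbook example, not a description of what the DLA compiler actually does; the function names and the scalar, single-channel setup are assumptions made for brevity.

```python
import math


def batchnorm(y, mean, var, gamma, beta, eps=1e-5):
    """Inference-time batch normalization for a single channel."""
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fold_batchnorm(weight, bias, mean, var, gamma, beta, eps=1e-5):
    """Fold the batch-norm parameters into the preceding layer's weight and bias."""
    scale = gamma / math.sqrt(var + eps)
    return weight * scale, (bias - mean) * scale + beta


def unfused(x, weight, bias, mean, var, gamma, beta):
    # Two passes: the intermediate y would be written out and read back.
    y = weight * x + bias
    return batchnorm(y, mean, var, gamma, beta)


def fused(x, weight, bias, mean, var, gamma, beta):
    # One pass: the folded weight and bias are computed once, offline.
    w, b = fold_batchnorm(weight, bias, mean, var, gamma, beta)
    return w * x + b


# Hypothetical layer parameters, chosen only for illustration.
params = dict(weight=0.8, bias=0.1, mean=0.3, var=1.7, gamma=1.2, beta=-0.4)
difference = abs(unfused(2.5, **params) - fused(2.5, **params))
print(difference < 1e-9)
```

Both paths compute the same value up to floating-point rounding; the fused path simply never materializes the intermediate result.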

TensorRT delivers a unified platform and common interface for AI inference on the GPU, the DLA, or both. The TensorRT builder provides the compile-time and build-time interface that invokes the DLA compiler. Once the plan file is generated, the TensorRT runtime calls into the DLA runtime stack to execute the workload on the DLA cores. Porting from GPU to DLA in TensorRT requires only a few additional flags.
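One way to see how small the GPU-to-DLA change can be is through `trtexec`, the command-line tool shipped with TensorRT. The sketch below only assembles the command line and does not run it. The helper name `build_trtexec_command` and the file names are hypothetical; `--useDLACore`, `--allowGPUFallback`, `--fp16`, `--onnx`, and `--saveEngine` are `trtexec` options.

```python
import shlex


def build_trtexec_command(onnx_path, engine_path, dla_core=None,
                          allow_gpu_fallback=True, fp16=True):
    """Assemble a trtexec command line; pass dla_core to target a DLA core."""
    args = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        # DLA runs reduced precision (FP16 or INT8), so FP16 is enabled here.
        args.append("--fp16")
    if dla_core is not None:
        # Select which DLA core the engine is built for.
        args.append(f"--useDLACore={dla_core}")
        if allow_gpu_fallback:
            # Let layers that DLA cannot run fall back to the GPU.
            args.append("--allowGPUFallback")
    return args


# Placeholder file names, for illustration only.
gpu_cmd = build_trtexec_command("model.onnx", "model.engine")
dla_cmd = build_trtexec_command("model.onnx", "model.engine", dla_core=0)

print(shlex.join(gpu_cmd))
print(shlex.join(dla_cmd))
```

The two commands differ only by the DLA core selection and the GPU fallback option, which is the "few additional flags" idea in practice. Whether a given network runs entirely on DLA depends on its layers and the TensorRT version, so treat this as a starting point.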

Sample here

GPU Fallback

TLT pre-trained models on DLA

Benefits

Additional AI functionality

Port your AI-heavy workloads over to the Deep Learning Accelerator to free up the GPU and CPU for more compute-intensive applications. Offloading the GPU and CPU allows you to add more functionality to your embedded application or increase the throughput of your application by parallelizing your workload across the GPU and DLA. The two DLAs on Orin can offer up to 9X the performance of the two DLAs on Xavier.

Power Efficiency

The DLA delivers the highest AI performance in a power-efficient architecture. It accelerates the NVIDIA AI software stack with almost 2.5X the power efficiency of a GPU. It also delivers high performance per area, making it the ideal solution for lower-power, compact embedded and edge AI applications.

Robust Applications

Design more robust applications with independent pipelines on the GPU and DLA to avoid a single point of failure. Combine traditional algorithms with AI algorithms for safety-critical or business-critical applications.

"Our team is using TensorFlow for model training, testing and developing. After we train the model in TensorFlow, we convert the model to TensorRT and we deploy the Xavier platform using NVDLA…. By using FP16 half-precision together with NVDLA we got more than 40x speedup."

— Zhenyu Guo, Director of Artificial Intelligence at Postmates X

Latest DLA News

Using Deep Learning Accelerators on NVIDIA AGX Platforms

This talk presents a high-level overview of the DLA hardware and software stack. We demonstrate how to use the DLA software stack to accelerate a deep learning-based perception pipeline and discuss the workflow to deploy a ResNet-50-based perception network on DLA. This workflow lets application developers offload work from the GPU to free it for other tasks, or optimize their application for energy efficiency.

cuDLA: Deep Learning Accelerator Programming using CUDA

cuDLA is an extension of NVIDIA CUDA that integrates GPU and DLA under the same programming model. We'll dive into the basic principles of cuDLA and how developers can use it to quickly program the DLA for a wide range of neural networks.

Resources

Working with DLA

DLA supports various layers such as convolution, deconvolution, fully connected, activation, pooling, batch normalization, and more. More information on the DLA support in TensorRT can be found here.

Getting started with DLA on Jetson (Tutorial)

Working with DLA

Learn more about the latest DLA updates on Jetson AGX Orin

Check out these resources to learn more about the latest DLA architecture.

Jetson AGX Orin Series Technical Brief

Webinar on the Jetson AGX Orin Series

Ask our experts questions on our forums

Searching for help on using the DLA with your applications? Check out our forums page to find answers to your questions.

Jetson Embedded Forums

Learn more about using the DLA on NVIDIA DRIVE

Find the latest documentation for working with the DLA in NVIDIA DRIVE here.

NVIDIA DRIVE DLA Resources